Blood-brain barrier permeability prediction

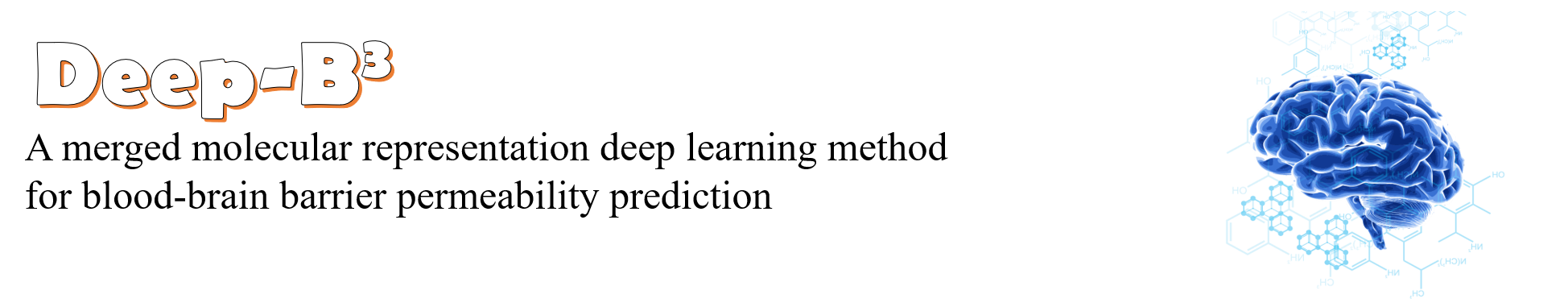

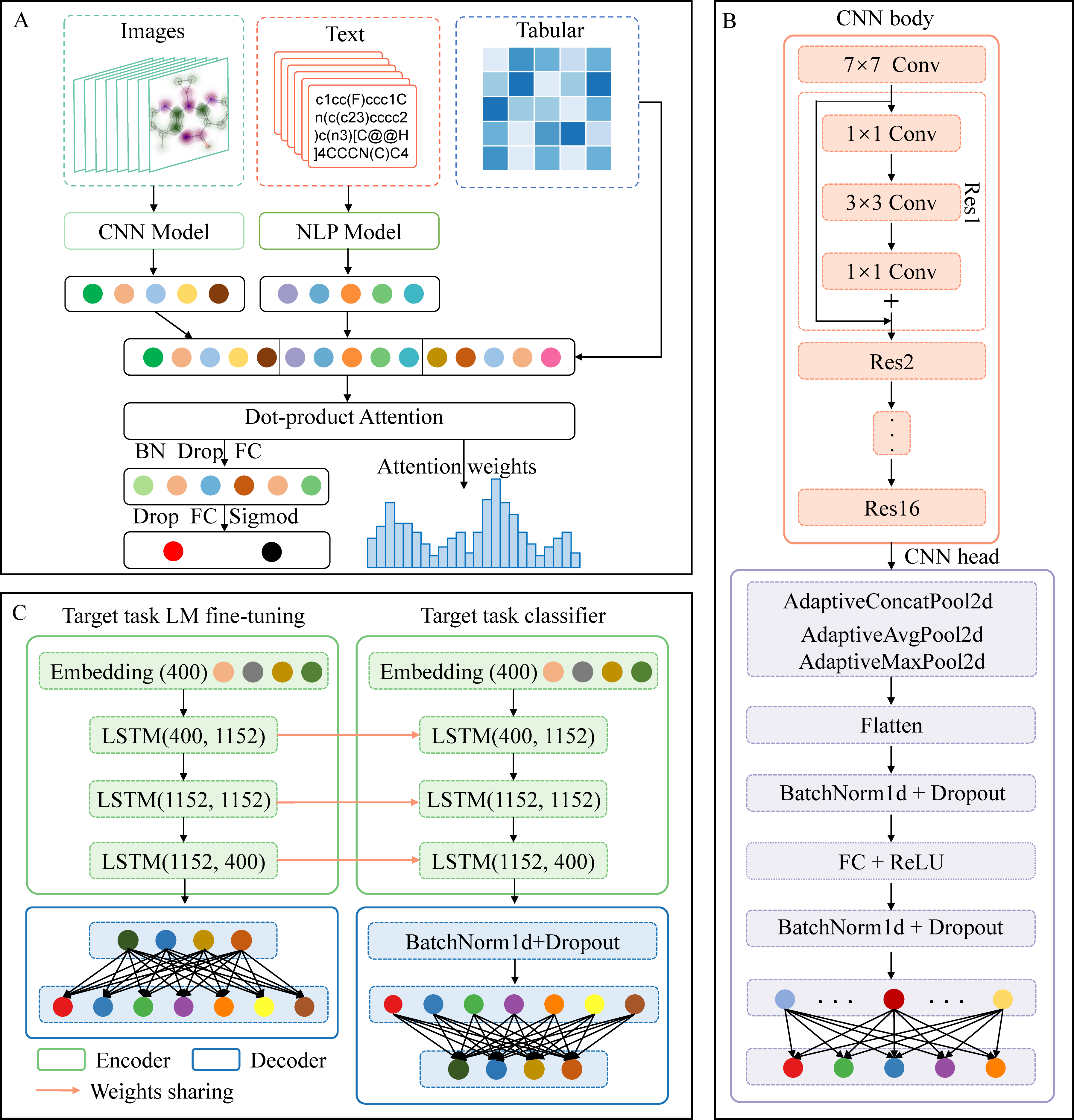

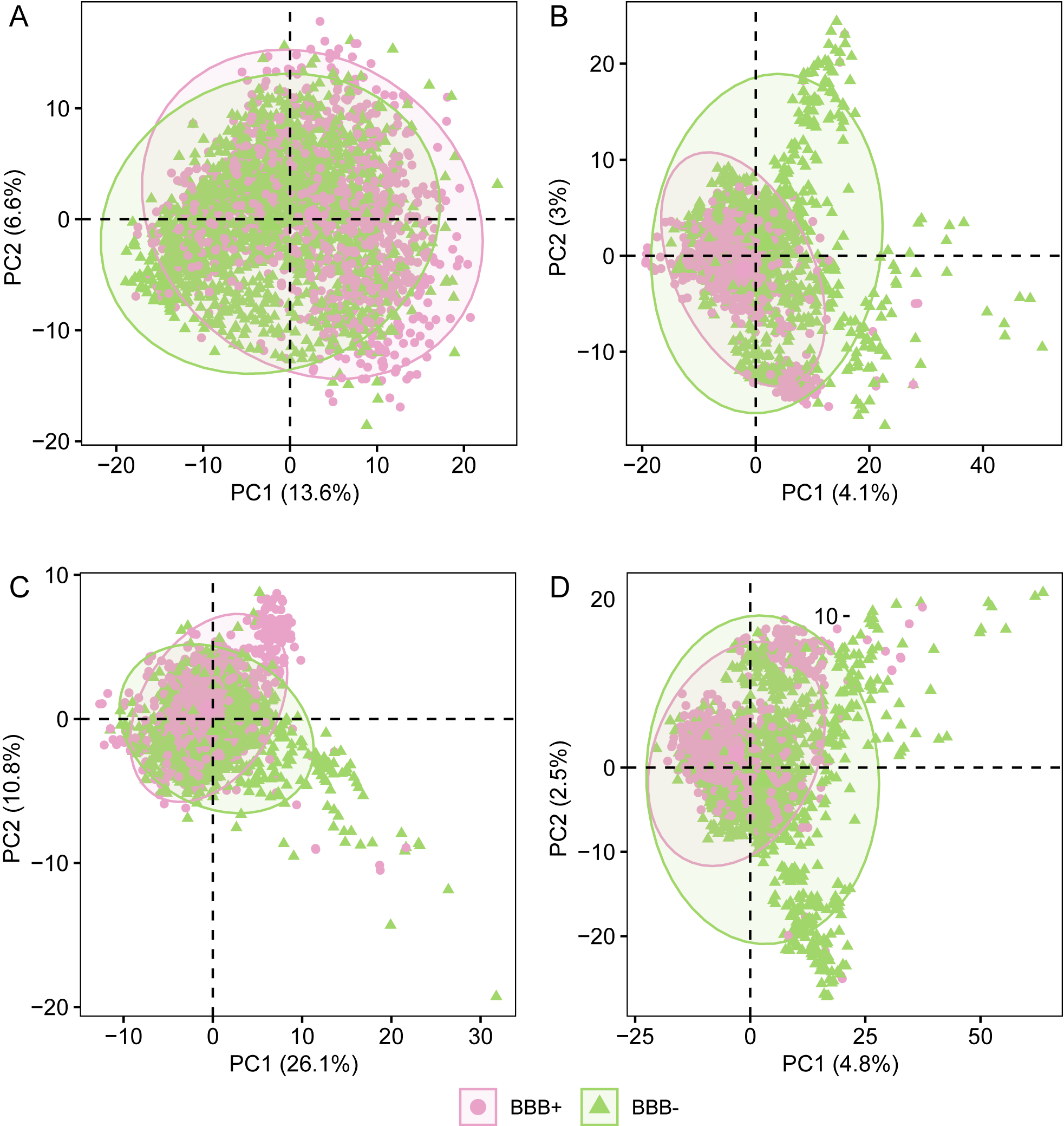

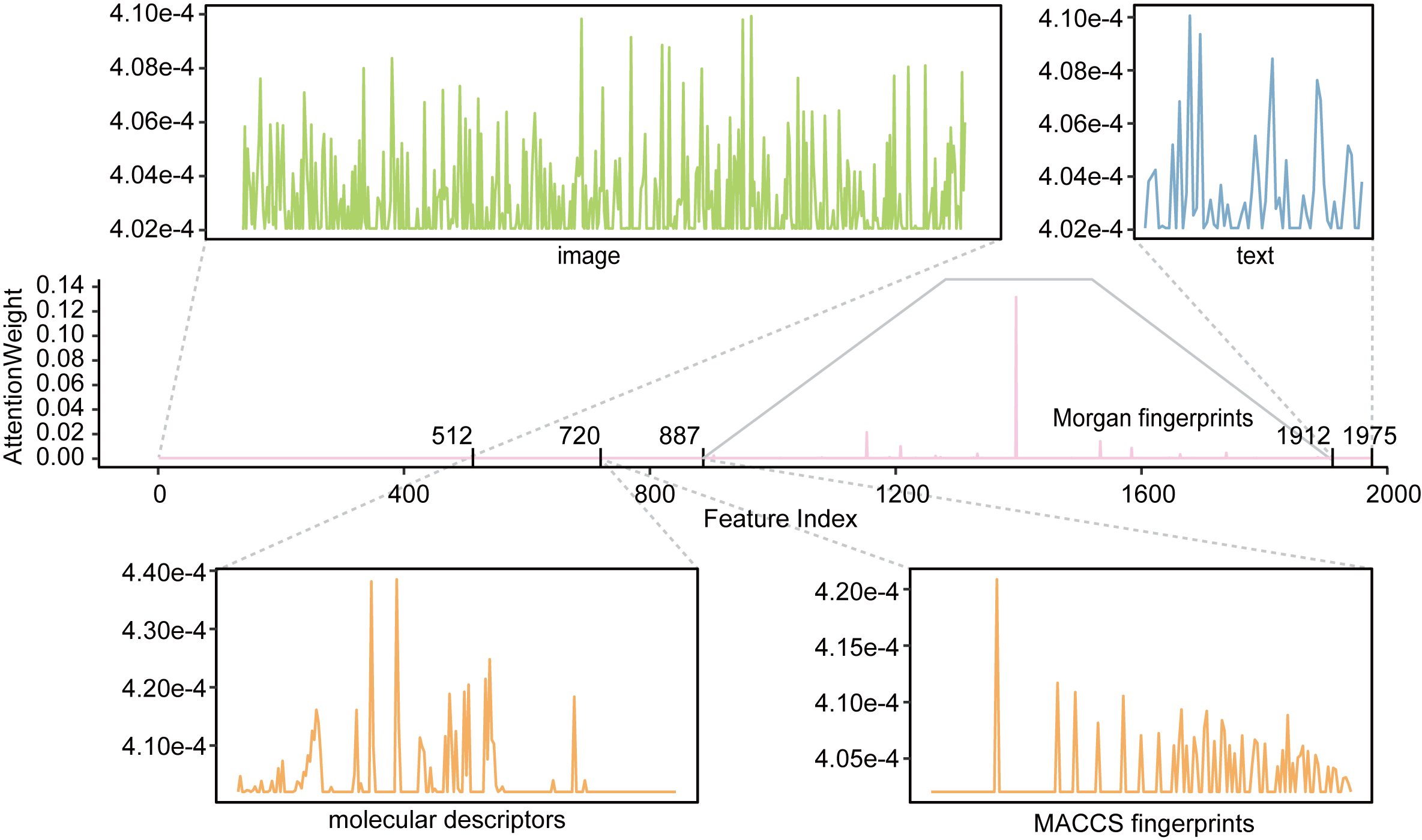

Deep-B3, a deep learning based multi-model framework, was developed to predict BBB permeability by integrating three kinds of features: molecular descriptors and fingerprints, molecular graphs, and SMILES strings. A pre-trained ResNet-50 model was used to extract features from the molecular images, and a pre-trained AWD-LSTM model was used to extract features from the SMILES strings. These features were then concatenated with the tabular features generated from the molecular descriptors and fingerprints. In total, 1975 features were fed into the attention layer: 512 features extracted by the CNN model, 1399 tabular features and 64 features generated by the NLP model. The self-attention layer identified the key features for predicting BBB permeability and passed the results to a series of fully connected layers (FCs) to make the final predictions. The framework was built with Fast.AI 1.0.
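The concatenation step can be illustrated with a minimal sketch. It uses only the block sizes stated above (512, 1399 and 64); the block names, the concatenation order and the random stand-in values are assumptions made for illustration, not details taken from the Deep-B3 implementation.

```python
import random

# Feature block sizes reported in the text. The names and the
# concatenation order (image, tabular, text) are assumptions.
BLOCK_SIZES = {"image": 512, "tabular": 1399, "text": 64}


def concatenate_features(blocks):
    """Concatenate per-modality vectors and record each block's index range."""
    combined, ranges, start = [], {}, 0
    for name, size in BLOCK_SIZES.items():
        vector = blocks[name]
        if len(vector) != size:
            raise ValueError(f"{name}: expected {size} features, got {len(vector)}")
        combined.extend(vector)
        ranges[name] = (start, start + size)  # half-open range [start, end)
        start += size
    return combined, ranges


# Random stand-ins for the outputs of the three feature extractors.
rng = random.Random(0)
blocks = {
    name: [rng.random() for _ in range(size)]
    for name, size in BLOCK_SIZES.items()
}

combined, ranges = concatenate_features(blocks)
print(len(combined))
print(ranges)
```

Keeping the index range of each block makes it possible to map a position in the 1975-dimensional vector back to its modality when the attention weights are inspected later.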

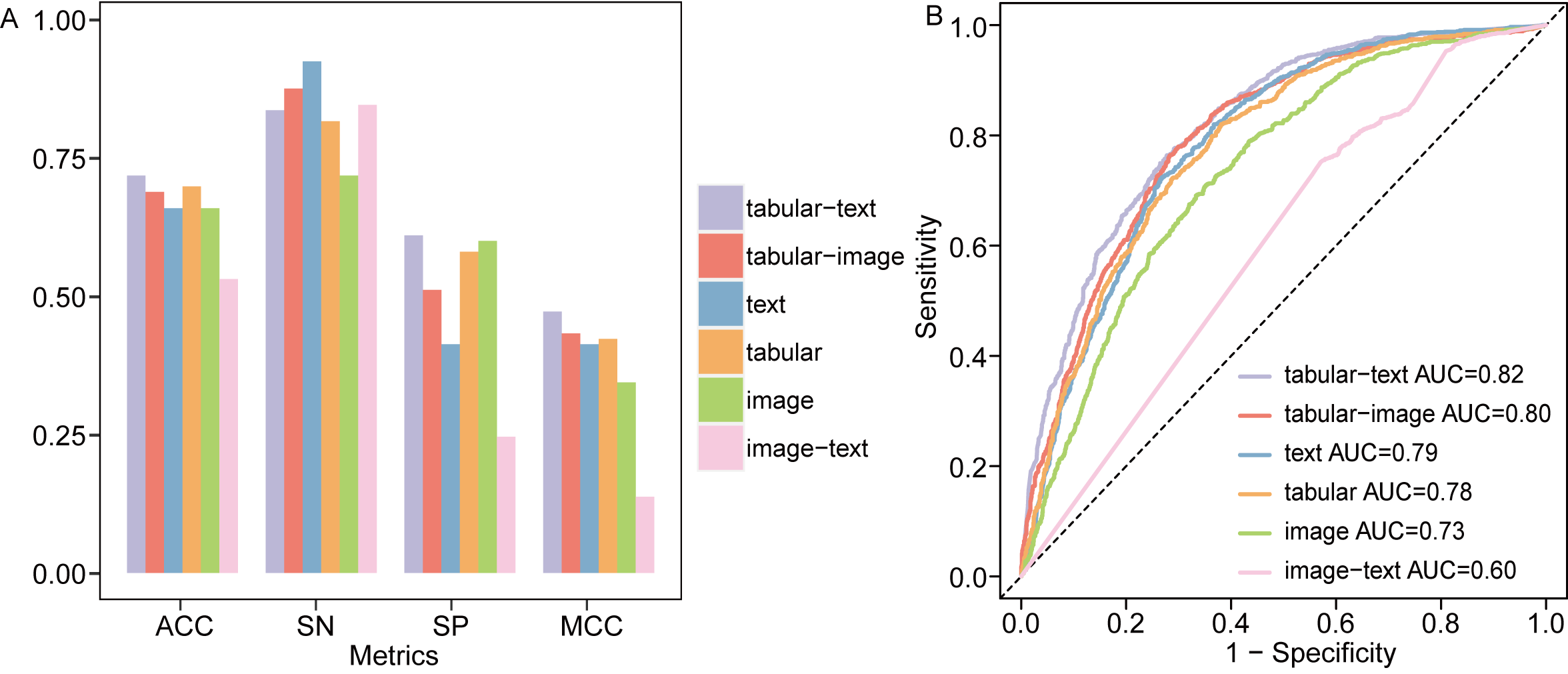

Principal component analysis (PCA) was used to reduce the feature dimensionality in an interpretable way. The first two principal components (PCs) of the image features output by the CNN model explain 20.2% of the variation between BBB+ and BBB-. The first two PCs of the tabular, text and concatenated features explain 7.1%, 36.9% and 8.3% of the overall variation between BBB+ and BBB-, respectively. Although some BBB+ compounds fall within clusters dominated by BBB- compounds, a distinct BBB+ cluster is still noticeable in the PCA results. Identifying BBB+ compounds, especially those in the overlapping region, is therefore an obstacle for the prediction task. Most BBB+ compounds, however, lie close to each other and form clusters beside the major cluster, especially in the PCA results for the tabular, text and concatenated features. The PCA results indicate that these features are able to distinguish BBB+ from BBB-.

We compared the performance of models based on different combinations of these features on the independent dataset. Among the single-feature models, the image-based model performed worse than the others overall but had the highest SP value. Of the other two models, the one built on text features had the highest SN, and the one built on tabular features achieved the best ACC and AUC. Among the models combining any two kinds of features, those combining the image features with either the tabular or the text features yielded the highest SN. The model trained on tabular and text features performed best, with the highest values for four of the five metrics (SP, MCC, ACC and AUC). Compared with the single-feature models, combining features pairwise always improved performance on some of the five metrics.
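For reference, the five metrics can be computed as in the sketch below. It assumes the abbreviations carry their usual meanings: sensitivity (SN), specificity (SP), accuracy (ACC), Matthews correlation coefficient (MCC) and area under the ROC curve (AUC). The labels, scores and the 0.5 decision threshold are made-up toy values, not results from the study.

```python
import math


def confusion_counts(y_true, y_pred):
    """Count TP, TN, FP, FN with 1 = BBB+ and 0 = BBB-."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn


def classification_metrics(y_true, y_pred):
    """SN, SP, ACC and MCC; assumes both classes occur in y_true."""
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "SN": tp / (tp + fn),
        "SP": tn / (tn + fp),
        "ACC": (tp + tn) / (tp + tn + fp + fn),
        "MCC": (tp * tn - fp * fn) / denom if denom else 0.0,
    }


def roc_auc(y_true, scores):
    """AUC as the chance that a random positive outscores a random negative."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy labels and predicted BBB+ probabilities (illustrative only).
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.7, 0.4, 0.6, 0.3, 0.2, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]

metrics = classification_metrics(y_true, y_pred)
auc = roc_auc(y_true, scores)
print(metrics, auc)
```

SN and SP depend on the chosen threshold, whereas AUC is computed from the raw scores, which is one reason a model can rank first on one metric and not on another.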

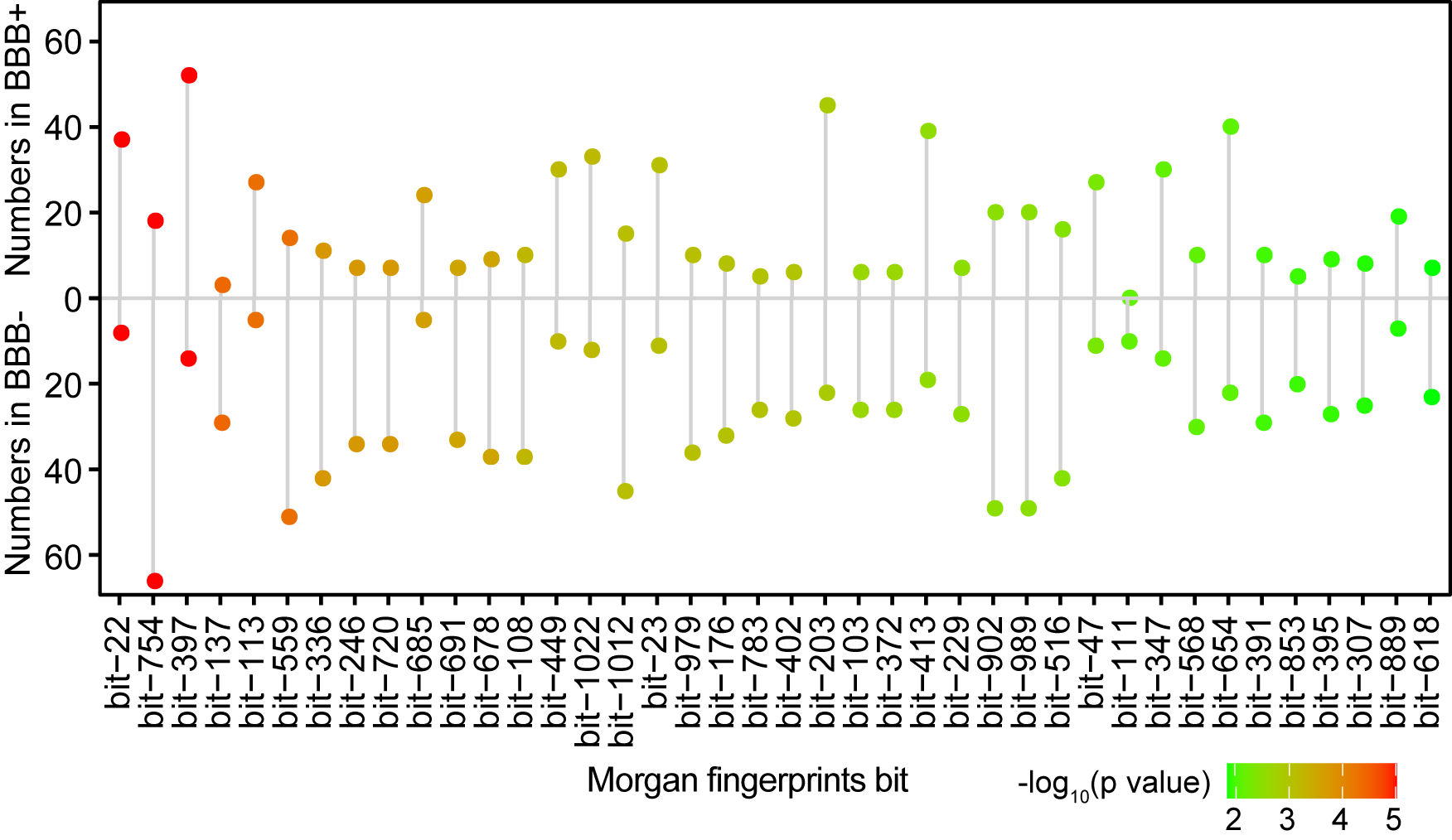

We performed a comparative analysis of the substructure distributions of BBB+ and BBB- compounds. According to the results, the bit-22, bit-754 and bit-397 substructures differed most significantly between BBB+ and BBB-. The bit-22 substructure is present in over 2.94% of all BBB+ samples but in only 0.57% of BBB- samples. More than 4.13% of the BBB+ molecules in the independent testing dataset contain the bit-397 substructure, whereas for BBB- this proportion is very small. In contrast, about 4.67% of BBB- molecules contain the bit-754 substructure, compared with only 1.43% of BBB+ molecules. Overall, these results give a descriptive summary of how substructures contribute to distinguishing BBB+ from BBB-.
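A comparison of this kind can be sketched as follows. The sketch assumes each molecule's fingerprint is available as the set of its "on" bit indices, and it ranks bits by the absolute difference in occurrence frequency between the two classes. That ranking criterion, the function names and the tiny 8-bit toy fingerprints are illustrative assumptions; the source does not state which test was used to judge significance.

```python
def bit_frequency(fingerprints, n_bits):
    """Fraction of molecules in which each fingerprint bit is set."""
    counts = [0] * n_bits
    for on_bits in fingerprints:
        for bit in on_bits:
            counts[bit] += 1
    total = len(fingerprints)
    return [count / total for count in counts]


def rank_bits_by_difference(pos_fps, neg_fps, n_bits):
    """Sort bits by |freq in BBB+ - freq in BBB-|, largest gap first."""
    pos_freq = bit_frequency(pos_fps, n_bits)
    neg_freq = bit_frequency(neg_fps, n_bits)
    rows = [(bit, pos_freq[bit], neg_freq[bit]) for bit in range(n_bits)]
    return sorted(rows, key=lambda row: abs(row[1] - row[2]), reverse=True)


# Toy fingerprints: each set lists the bits that are "on" for one molecule.
bbb_pos = [{0, 1}, {1, 2}, {1, 5}, {1}]
bbb_neg = [{0, 5}, {5, 6}, {2, 5}, {3, 5}]

ranked = rank_bits_by_difference(bbb_pos, bbb_neg, n_bits=8)
for bit, pos_share, neg_share in ranked[:2]:
    print(f"bit-{bit}: BBB+ {pos_share:.2%}, BBB- {neg_share:.2%}")
```

In the toy data, bit 1 is enriched in BBB+ and bit 5 in BBB-, mirroring the way bit-22 and bit-397 favour BBB+ while bit-754 favours BBB- in the text.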

The attention weights were visualized to investigate the impact of the different kinds of features on BBB permeability. For this purpose, the feature importance was calculated as the average of the values along the attention matrix for each molecule. For this molecule, the Morgan fingerprints among the tabular features are more important, and bit-397, mentioned in the above analysis, has the highest weight of all features. In addition, high attention weights can also be observed for the image and text features, indicating that they are important for BBB permeability prediction.
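The averaging step can be sketched for a single molecule as below. This is a toy illustration under stated assumptions: the attention scores are plain products of feature values rather than learned query and key projections, and the average is taken down each column (the attention a feature receives). The source does not specify the axis or the attention formulation, so both are assumptions, as are all function names.

```python
import math


def softmax(row):
    """Numerically stable softmax of a list of scores."""
    peak = max(row)
    exps = [math.exp(value - peak) for value in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention_matrix(features):
    """Toy attention: row i holds the softmax of the scores x_i * x_j."""
    return [softmax([xi * xj for xj in features]) for xi in features]


def feature_importance(attention):
    """Average attention received by each feature (column mean)."""
    n_rows = len(attention)
    n_cols = len(attention[0])
    return [sum(row[j] for row in attention) / n_rows for j in range(n_cols)]


# Four made-up feature values standing in for one molecule's feature vector.
features = [0.1, 2.0, 0.3, 1.0]
attention = self_attention_matrix(features)
importance = feature_importance(attention)
top_feature = max(range(len(importance)), key=importance.__getitem__)
print(importance, top_feature)
```

Because every row of the attention matrix sums to one, the importance values also sum to one, so they can be read as each feature's share of the total attention for that molecule.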